Union Minister Ashwini Vaishnaw says India's proactive 'techno-legal' approach to regulating AI and deepfakes is a global benchmark. He noted India's 'template' for AI governance has received international acclaim, with new IT rules now in effect.

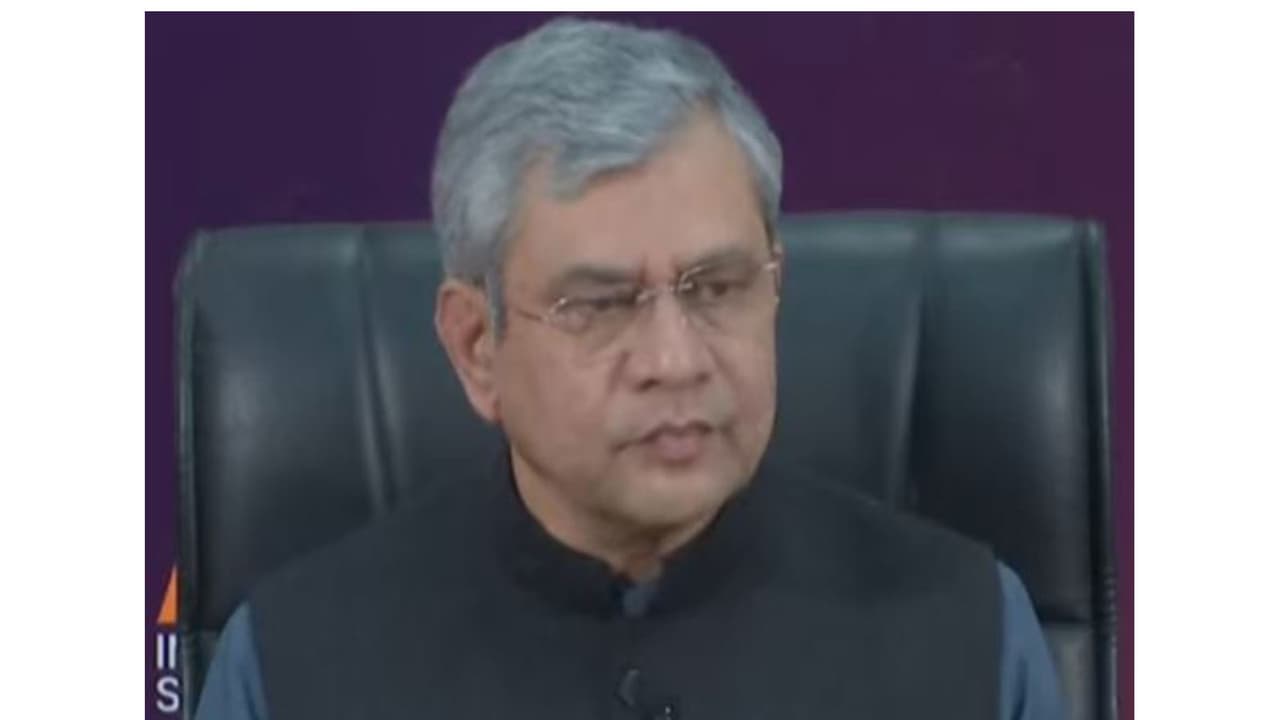

Union Electronics and Information Technology Minister Ashwini Vaishnaw on Friday emphasised the urgency of regulating Artificial Intelligence (AI) and deepfakes, noting that India's proactive "techno-legal" approach is increasingly being viewed as a global benchmark. Addressing the closing press briefing of the India AI Impact Summit 2026, the Union Minister said the global community is moving decisively toward regulation and that India's "template" for AI governance has received international acclaim.

He was responding to a question on the evolving challenges of synthetically generated information (SGI) and deepfakes. "Many countries are already moving to bring in regulations on SGI. Many have congratulated India on our approach. In fact, three countries have explicitly said they would like to make their framework like India's. Our template is 'bahut accha' (very good)," Vaishnaw said.

New IT Rules Target Synthetic Content

The 2026 amendment to India's Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021, which covers synthetically generated information (SGI), or synthetic audio-visual content, comes into force today. The amendment, which targets deepfakes and AI-generated content, was notified by the Ministry of Electronics and Information Technology on February 10, 2026. The new rules require platforms to deploy automated tools to verify whether content is synthetically generated, and to act on the results. A platform found to have knowingly allowed synthetic content that violates the rules risks losing safe harbour protection under Section 79 of the IT Act.

Transparency and Legality as Core Principles

Addressing media queries, Vaishnaw underscored the need for clear boundaries between human-made and machine-generated media, stating that transparency must be the bedrock of AI integration. "There should be transparency on whether it is real content or AI-generated. Watermarking is essential so that the user knows the nature of the information they are consuming," he added.

Vaishnaw clarified that the digital world is not a "lawless frontier" and reiterated that the fundamental legalities of the physical world apply to the internet. He underscored what he termed a constitutional principle underpinning the amendments: that illegality does not change character merely because it migrates online. "In society, what is illegal according to the Constitution and what is illegal in the physical world is also illegal online. We are moving ahead rapidly with a techno-legal framework to ensure this," the minister noted.

Further, the Union Minister said that several countries have congratulated India for taking what he described as a "good initiative." According to him, watermarking and labelling norms for synthetic content are likely to become a global template in the coming years. He added that he has "not found anyone who has opposed it."

PM Modi Urges Global Standards for AI

In his address at the India AI Impact Summit at Bharat Mandapam on February 19, PM Modi also underscored the urgent need for global standards to combat digital threats such as deepfakes, proposing clear authentication measures for AI-generated content. "Let us pledge to develop AI as a global common good. A crucial need today is to establish global standards. Deepfakes and fabricated content are destabilising the open society. In the digital world, content should also have authenticity labels so people know what's real and what's created with AI. As AI creates more text, images, and video, the industry increasingly needs watermarking and clear-source standards. Therefore, it's crucial that this trust is built into the technology from the start," the Prime Minister said.

Stricter Compliance Mandates for Intermediaries

Under the amended Rule 3(1)(c), intermediaries (social media platforms such as Facebook, Instagram, YouTube and X, as well as other websites) will now be required to inform users every three months, instead of once a year, about the consequences of violating the platform's terms of service, privacy policy or user agreement. Users must be clearly informed that access or usage rights may be withdrawn or disabled for non-compliance, that they may face penalties under applicable laws for creating, generating or modifying unlawful content, and that certain offences require mandatory reporting under laws such as the Protection of Children from Sexual Offences (POCSO) Act, 2012 and the Bharatiya Nagarik Suraksha Sanhita (BNSS), 2023.

The amendments require platforms to comply with court-ordered or law enforcement-directed takedowns within three hours, down from the earlier 36-hour window. Similarly, platforms must remove non-consensual nudity within two hours, down from 24 hours. (ANI)

(Except for the headline, this story has not been edited by Asianet Newsable English staff and is published from a syndicated feed.)